Merge branch 'pure_fork_join' into 'master'

Change to pure fork-join task model

See merge request !11

lib/pls/src/internal/scheduling/task.cpp

0 → 100644

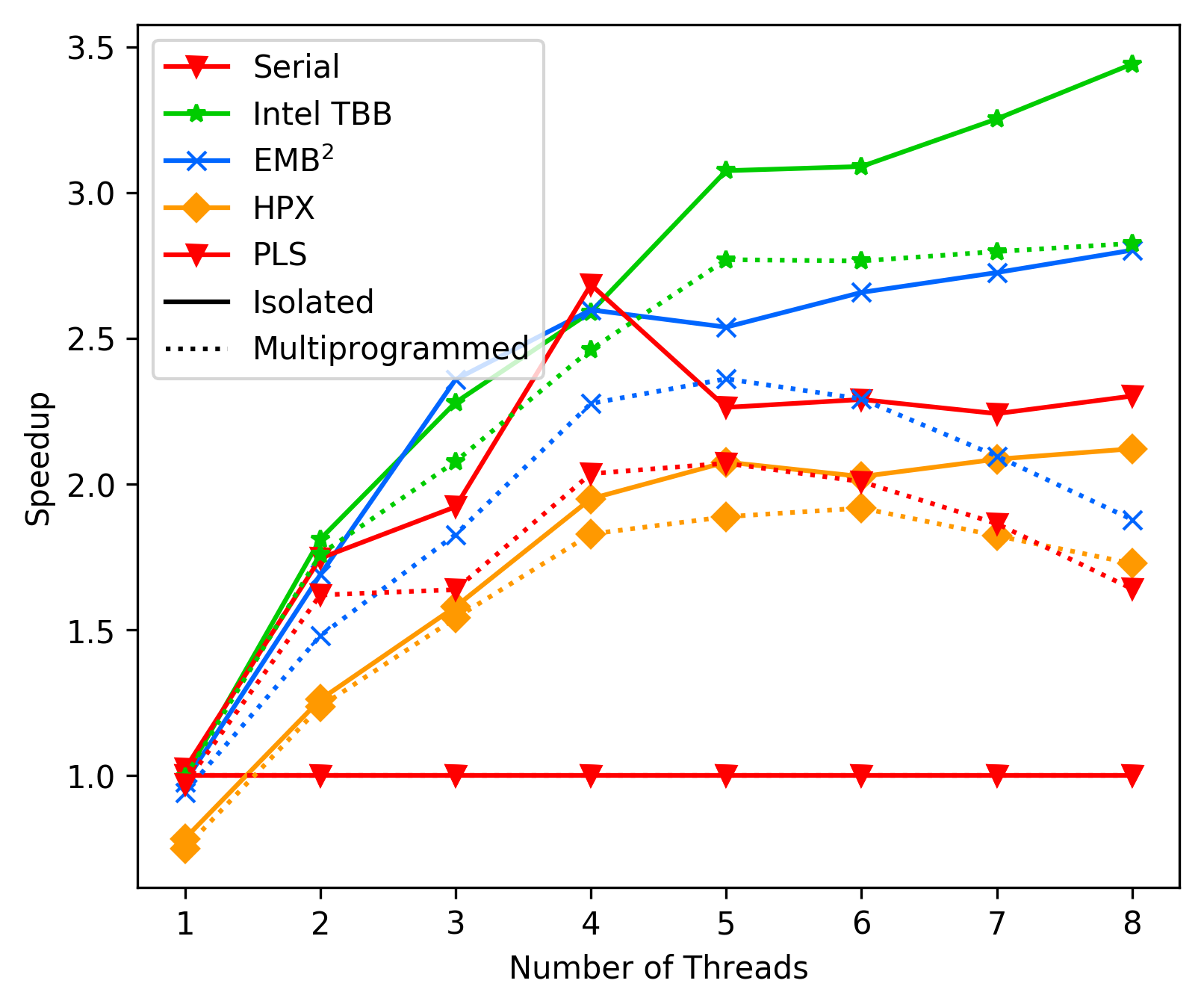

media/3bdaba42_fft_average.png

0 → 100644

195 KB

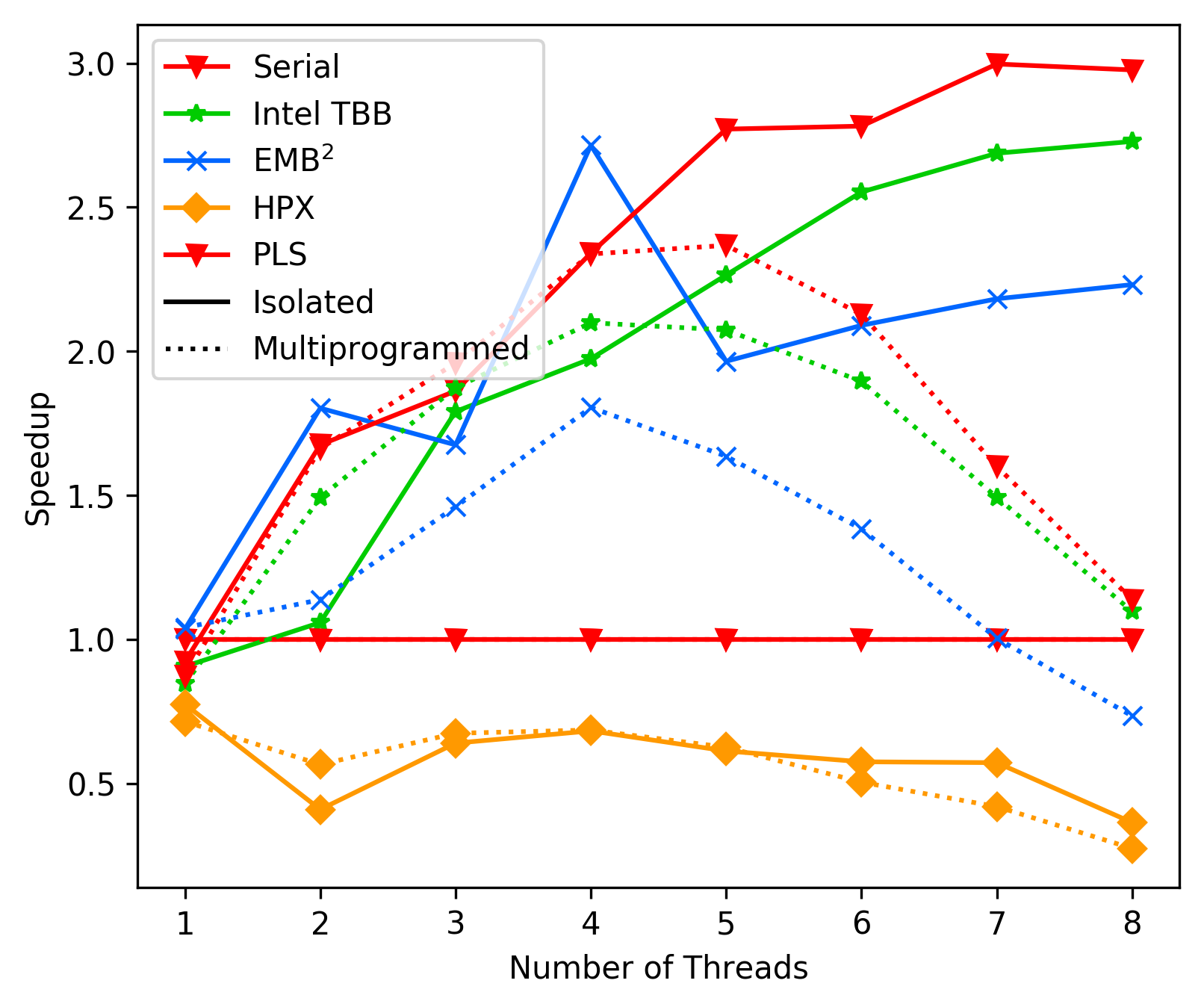

media/3bdaba42_heat_average.png

0 → 100644

201 KB

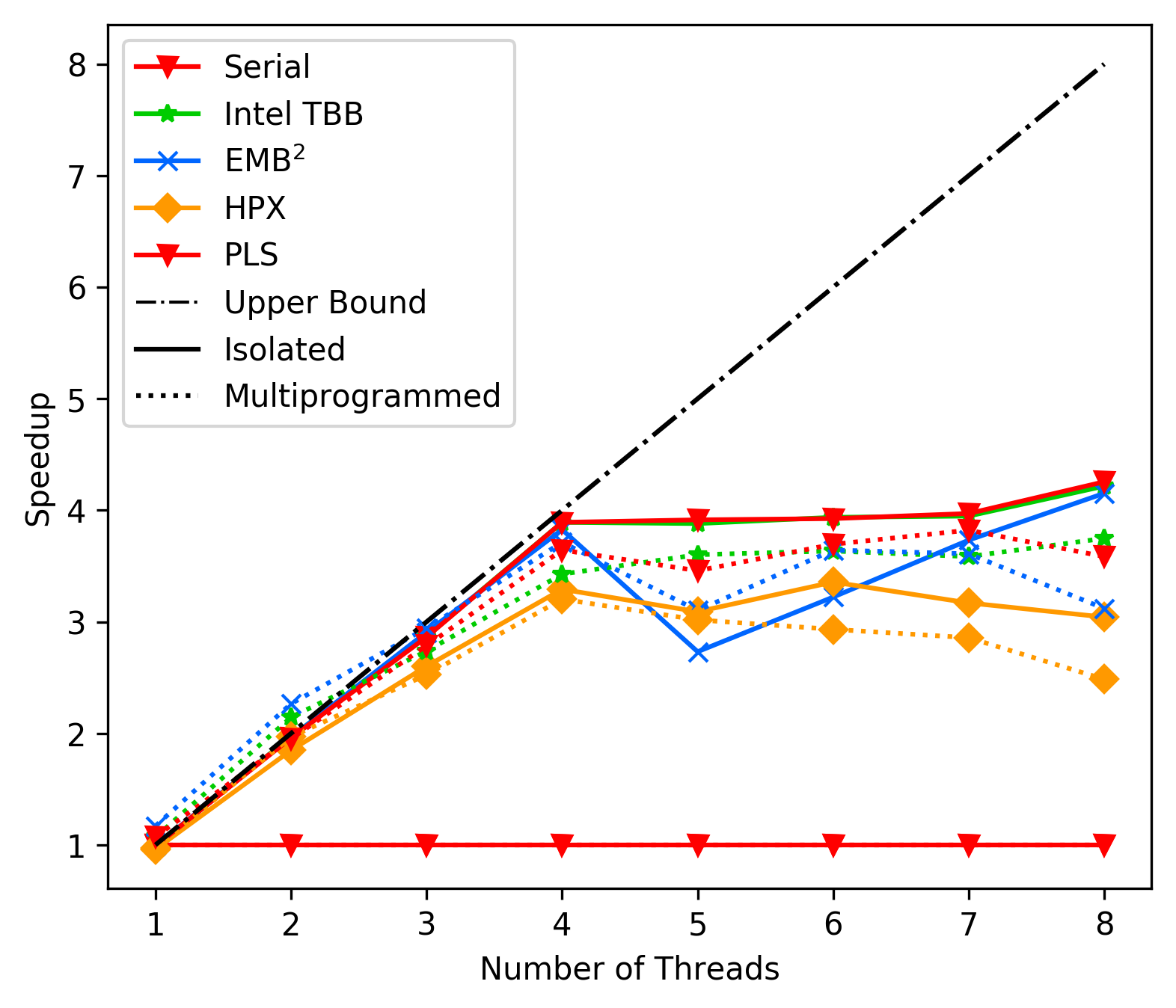

media/3bdaba42_matrix_average.png

0 → 100644

179 KB

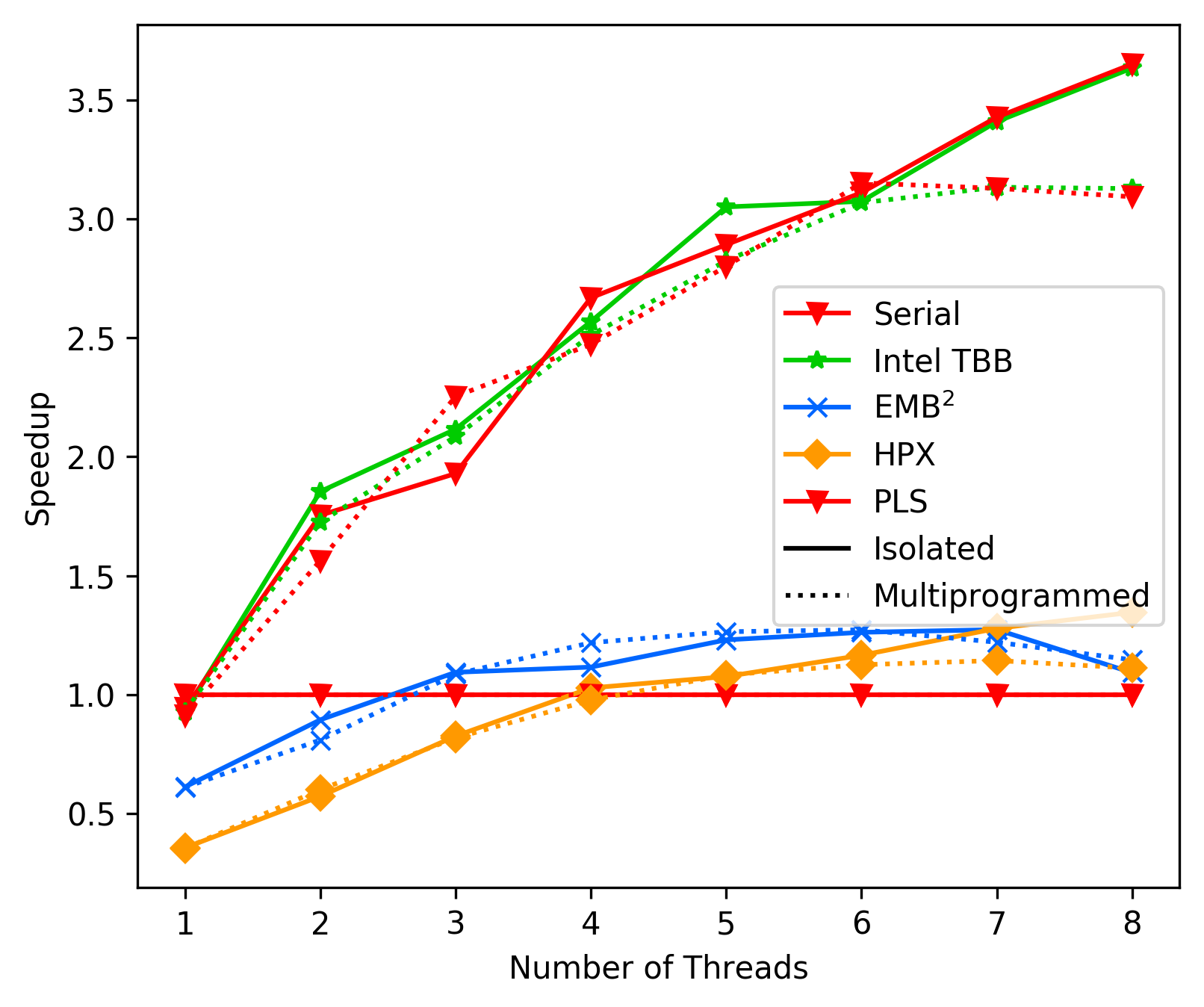

media/3bdaba42_unbalanced_average.png

0 → 100644

175 KB

media/5044f0a1_fft_average.png

0 → 100644

193 KB
