Minor changes for profiling and add more alignment.

The idea is to exclude as many sources as possible that could lead to issues with contention and cache misses. After some experimentation, we think that hyperthreading is simply not working very well with our kind of workload. In the future we might simply test on other hardware.

Showing

File moved

PERFORMANCE-v2.md

0 → 100644

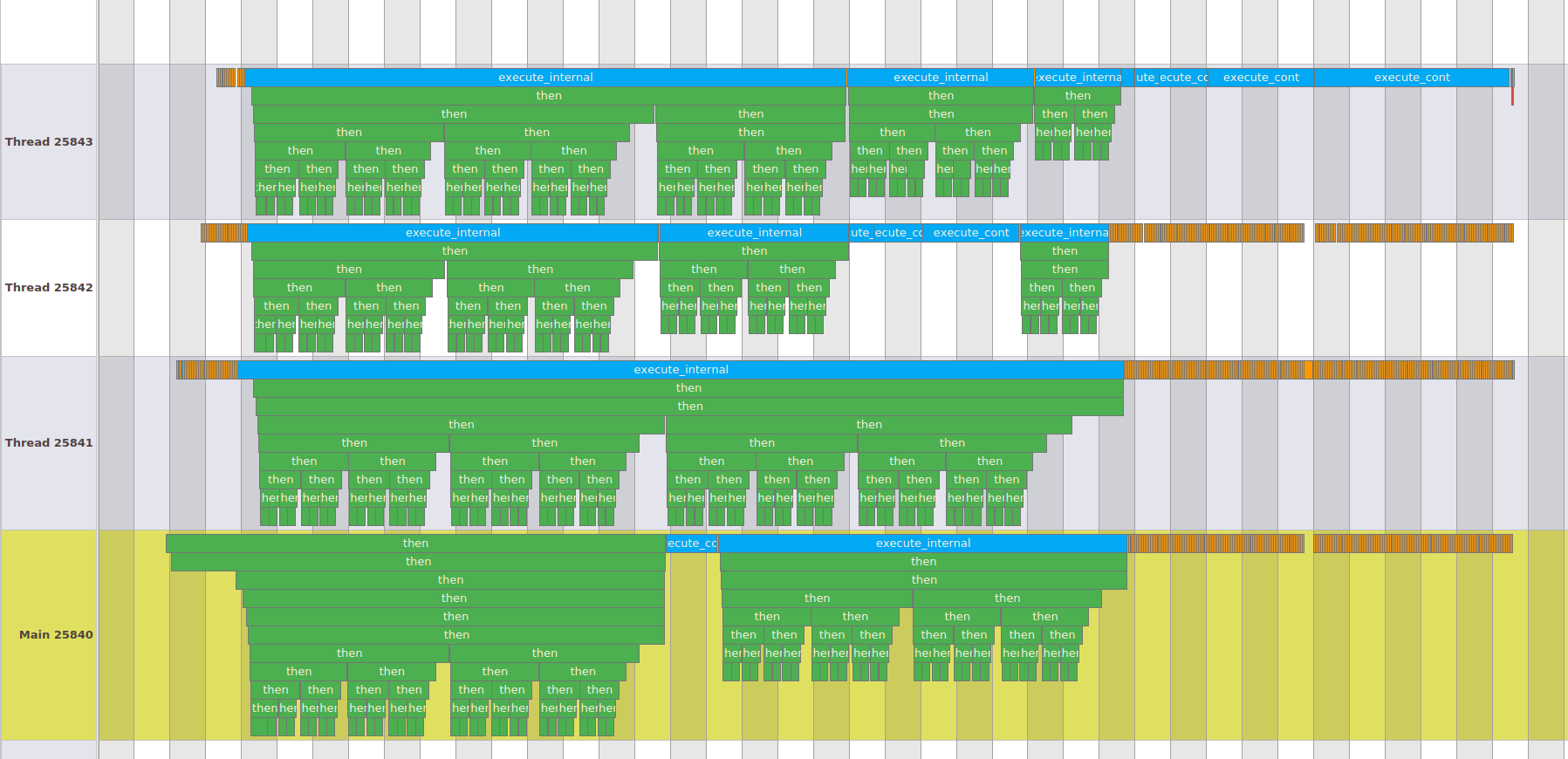

media/e34ea267_fft_execution_pattern.png

0 → 100644

85.4 KB

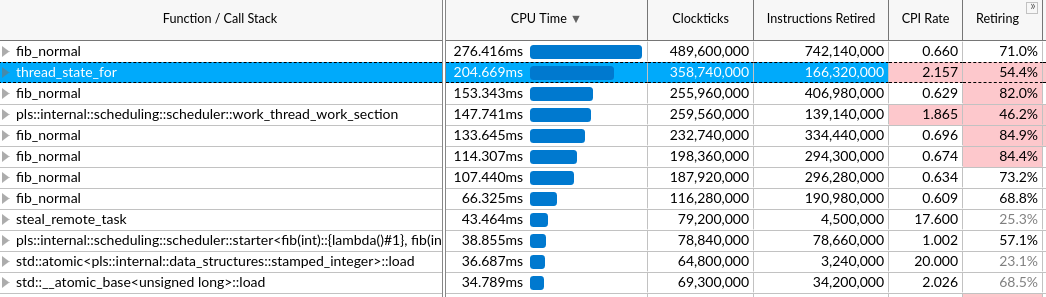

media/e34ea267_thread_state_for.png

0 → 100644

93.6 KB

Please

register

or

sign in

to comment