Remove notes for PLS_v1.

If required, they are still in the history. However, they are not up to date and thus mostly confusing.

PERFORMANCE-v1.md

deleted

100644 → 0

PERFORMANCE-v2.md

deleted

100644 → 0

190 KB

media/116cf4af_fft_average_sleep.png

deleted

100644 → 0

183 KB

media/116cf4af_fft_vtune.png

deleted

100644 → 0

143 KB

media/116cf4af_heat_average.png

deleted

100644 → 0

198 KB

176 KB

185 KB

media/116cf4af_matrix_vtune.png

deleted

100644 → 0

150 KB

media/18b2d744_fft_average.png

deleted

100644 → 0

190 KB

173 KB

71.2 KB

75.7 KB

75.5 KB

71.3 KB

media/3bdaba42_fft_average.png

deleted

100644 → 0

195 KB

media/3bdaba42_heat_average.png

deleted

100644 → 0

201 KB

media/3bdaba42_matrix_average.png

deleted

100644 → 0

179 KB

175 KB

media/5044f0a1_fft_average.png

deleted

100644 → 0

193 KB

media/7874c2a2_pipeline_speedup.png

deleted

100644 → 0

187 KB

media/aa27064_fft_average.png

deleted

100644 → 0

197 KB

177 KB

185 KB

174 KB

184 KB

214 KB

200 KB

196 KB

media/b9bb90a4-laptop-best-case.png

deleted

100644 → 0

194 KB

media/cf056856_fft_average.png

deleted

100644 → 0

185 KB

178 KB

media/d16ad3e_fft_average.png

deleted

100644 → 0

195 KB

85.4 KB

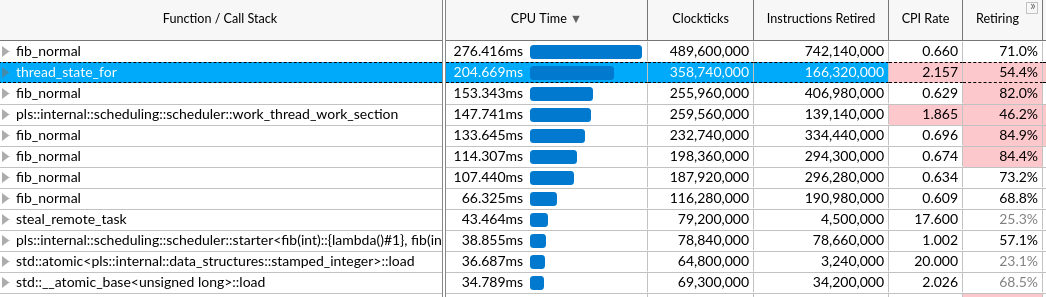

media/e34ea267_thread_state_for.png

deleted

100644 → 0

93.6 KB
